[Iter-X] 53/100days

Day 5️⃣3️⃣

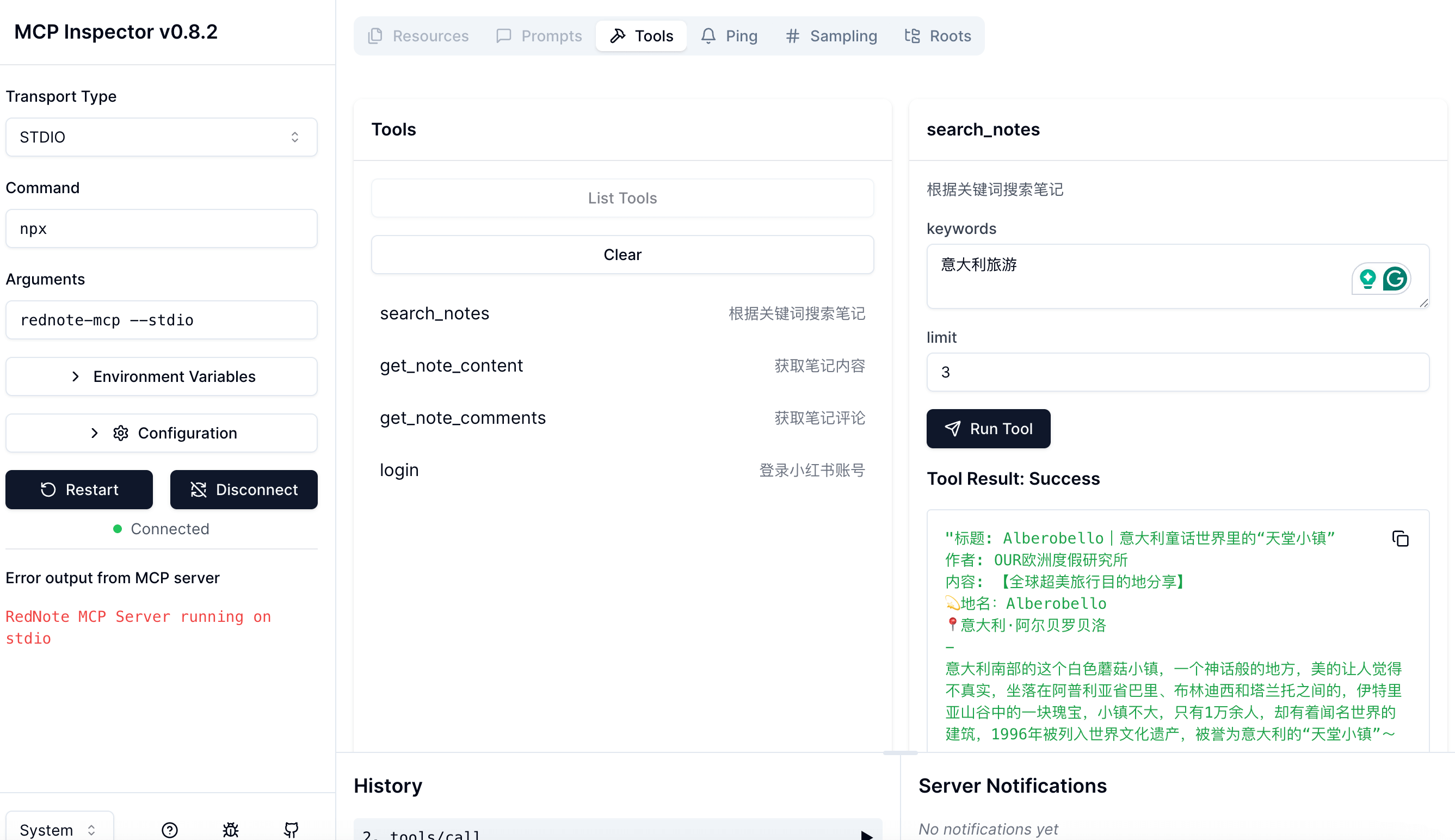

Today I spent some time building a RedNote MCP server with Playwright. Agents can use it later to fetch note content. Cursor is truly a lifesaver: I even used playwright-mcp to let it navigate pages, extract content, and write code all on its own. Sometimes I just copy an element in the browser and paste it, and it writes the selector automatically. In the pre-AI days, using Selenium or Playwright by hand was a nightmare. You had to locate every element yourself and then test thoroughly to make sure everything worked. In this respect, I really respect AI.
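To make the selector-based idea concrete, here is a minimal sketch of what the fetching part of such a server might look like with Playwright's sync API. It is not the actual server code: the selectors in `NOTE_SELECTORS` and the names `fetch_note` and `clean_text` are hypothetical placeholders, and a real page would need its own selectors.

```python
import re

# Hypothetical selectors: placeholders, not RedNote's real markup.
NOTE_SELECTORS = {
    "title": ".note-title",
    "body": ".note-content",
}


def clean_text(raw: str) -> str:
    """Collapse runs of whitespace so the agent receives compact text."""
    return re.sub(r"\s+", " ", raw).strip()


def fetch_note(url: str, selectors: dict[str, str] = NOTE_SELECTORS) -> dict[str, str]:
    """Open the page in headless Chromium and read each selected element."""
    # Imported lazily so the helpers above load without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="domcontentloaded")
            note = {}
            for field, selector in selectors.items():
                # Use the first match; raises if nothing matches before the timeout.
                note[field] = clean_text(page.locator(selector).first.inner_text())
            return note
        finally:
            browser.close()
```

Keeping the selectors in one dictionary means that when the page changes, only that mapping needs updating, not the fetching logic.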

When it comes to AI browsing and scraping web content, there are three common approaches, including the element-based one I used with Playwright:

1️⃣ Directly fetching via URL: this is the approach most likely to be blocked by anti-scraping systems, since many websites now detect abnormal user behavior.

2️⃣ Simulating real user behavior via headless browsers: this is the approach I used above. It’s fairly reliable and relatively cheap. The downside is that if the page structure or elements change, you need to update your selectors and logic.

3️⃣ Browser-use powered by vision + LLMs: this is the latest approach. The tool injects JS to label the interactive elements on the page, and the LLM uses multimodal reasoning to decide its next move. The biggest pro: it needs almost no manual adjustment and closely mimics human behavior. The con: it’s expensive.
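The labeling step in the third approach can be sketched offline. Real tools do this with injected JS on a live page and usually send a screenshot along with the labels; the version below is a simplified, text-only stand-in using Python's standard `html.parser`. The names `label_interactive` and `build_prompt`, the tag list, and the prompt wording are all assumptions for illustration.

```python
from html.parser import HTMLParser

# Tags treated as interactive in this sketch (an assumption, not an exhaustive list).
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class InteractiveLabeler(HTMLParser):
    """Collects interactive elements and gives each one a numeric label."""

    def __init__(self):
        super().__init__()
        self.elements = []

    def handle_starttag(self, tag, attrs):
        if tag in INTERACTIVE_TAGS:
            attr_map = dict(attrs)
            # Pick the first attribute that hints at what the element does.
            hint = (
                attr_map.get("aria-label")
                or attr_map.get("placeholder")
                or attr_map.get("href")
                or ""
            )
            self.elements.append(
                {"index": len(self.elements), "tag": tag, "hint": hint}
            )


def label_interactive(html: str) -> list:
    """Return the labeled interactive elements found in the HTML."""
    parser = InteractiveLabeler()
    parser.feed(html)
    parser.close()
    return parser.elements


def build_prompt(goal: str, elements: list) -> str:
    """Turn the labeled elements into a text prompt for the model."""
    lines = [f"Goal: {goal}", "Interactive elements:"]
    lines += [f"[{e['index']}] <{e['tag']}> {e['hint']}".rstrip() for e in elements]
    lines.append("Reply with the index of the element to act on next.")
    return "\n".join(lines)
```

Because the model picks an index instead of relying on a hand-written selector, a layout change does not require code changes. The cost moves to the model call made at every step.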

Current Progress Summary:

- UI/UX Design: 33%

- Backend (Go): 53%

- Client-side (Flutter): 42%

- Data: 12%

If you think you meet the following criteria, let’s talk:

- Can stay committed

- Have a dream

- Interested and passionate
